Timo Schick (@timo_schick) / X

By a mysterious writer

Last updated 24 October 2024

Timo Schick on X: 🎉New paper🎉 In Self-Diagnosis and Self-Debiasing, we investigate whether pretrained LMs can use their internal knowledge to discard undesired behaviors and reduce biases in their own outputs (w/@4digitaldignity + @

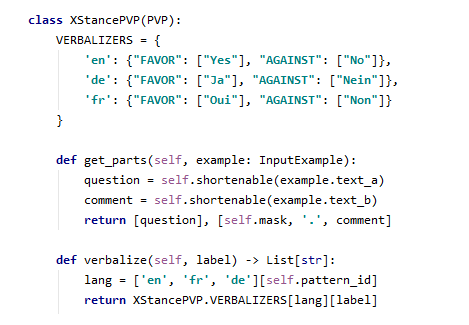

Timo Schick on X: PET ( now runs with the latest version of @huggingface's transformers library. This means it is now possible to perform zero-shot and few-shot PET learning with multilingual models

Michele Bevilacqua (@MicheleBevila20) / X

Timo Schick – Member of Technical Staff – Inflection AI

Timo Schick

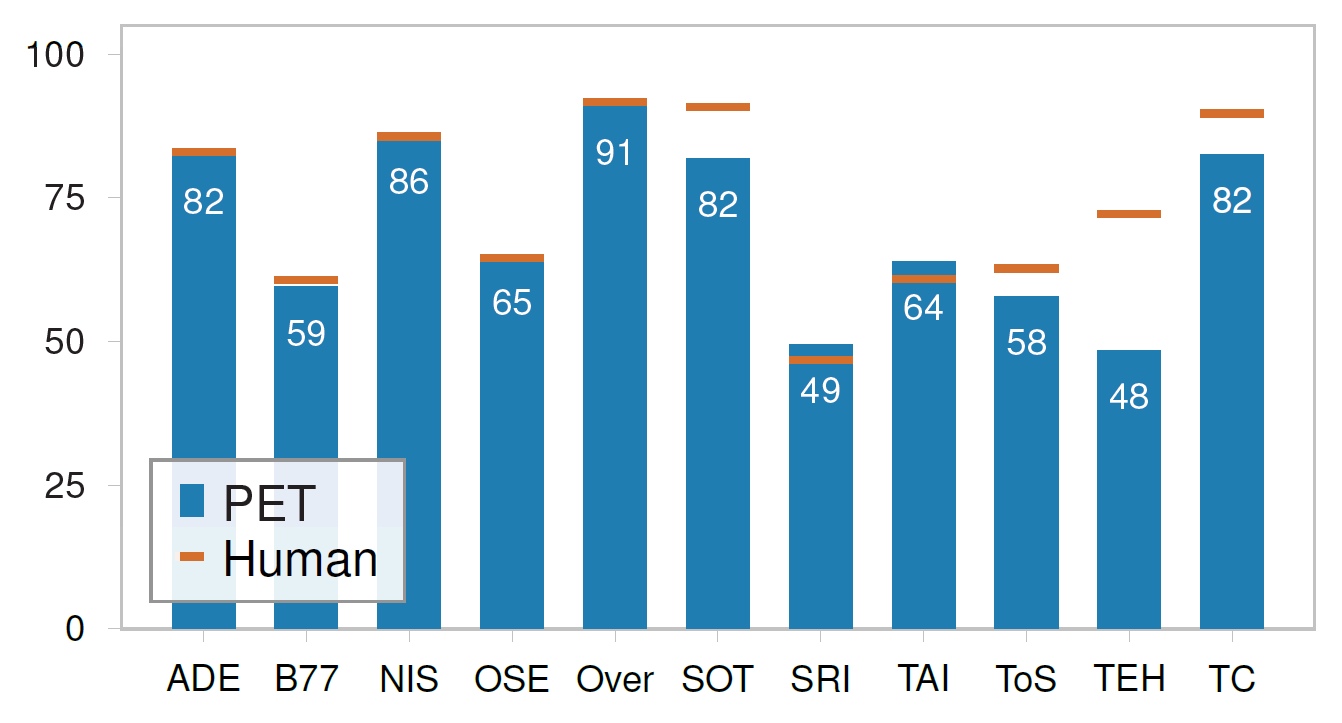

Timo Schick on X: 🎉 New paper 🎉 We show that prompt-based learners like PET excel in true few-shot settings (@EthanJPerez) if correctly configured: On @oughtinc's RAFT, PET performs close to non-expert

Edouard Grave ✈️ NeurIPS 2023 (@EXGRV) / X

Timo Schick on X: Interested in distilling zero-shot knowledge from big LMs like GPT-3? Or in learning more about a movie called Bullfrogs on Poopy Mountain? 🐸💩 Check out our blog post

Emanuele Vivoli (@EmanueleVivoli) / X